By: Ryan Day, Sr. Solution Architect, Dasher Technologies

Today I’m going to focus on network storage protocols and how they may (or may not) affect the performance, resiliency and efficiency of a VMware environment. I’ll start by briefly describing the protocols, then compare them in a number of performance categories and briefly touch on resiliency and VAAI (vStorage APIs for Array Integration) support before giving a summary conclusion.

The Choices:

iSCSI – Internet Small Computer System Interface (block)

NFS – Network File System (file)

FC – Fibre Channel (block)

FCoE – Fibre Channel over Ethernet (block)

The Rundown:

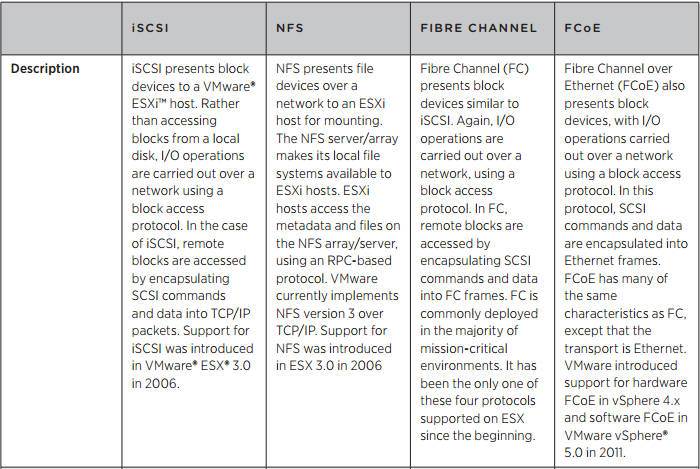

These protocols fall into two categories, file and block, which describe the type of I/O between the client and the storage. Here is a table describing each protocol:

Image Source: VMware Storage Protocol Comparison White Paper


PERFORMANCE:

In this section I’ll compare the four network storage protocols above in four performance categories: latency, two types of throughput (IOPS and MB/s), and the CPU overhead a given protocol incurs on the VMware ESX host.

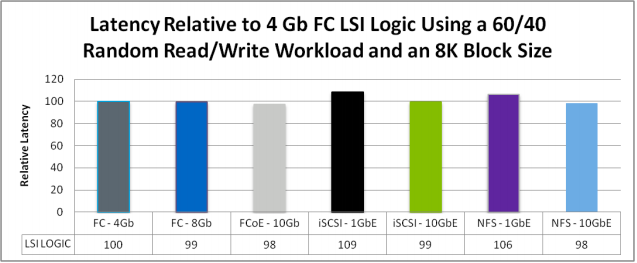

Latency:

Latency (round trip) is the measure of time between when one network endpoint sends information to another endpoint and receives an acknowledgement in return.

This is an extremely important performance characteristic for some applications, most notably online transaction processing (OLTP) databases and e-commerce web servers. Its relevance to a VMware environment depends largely on the application, but VMware does recommend keeping the average latency between a VM and its virtual disk below 30 ms.
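Here is a minimal sketch of how that guideline could be applied. It assumes you already have a series of round-trip latency samples in milliseconds, for example exported from a monitoring tool. The check is simply an average compared against the 30 ms figure. The function name and sample values are hypothetical.

```python
from statistics import mean

# VMware's guideline as cited above: average VM-to-virtual-disk latency below 30 ms
LATENCY_GUIDELINE_MS = 30.0

def within_latency_guideline(samples_ms: list[float],
                             limit_ms: float = LATENCY_GUIDELINE_MS) -> bool:
    """Return True if the average round-trip latency is below limit_ms."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    return mean(samples_ms) < limit_ms

# Hypothetical latency samples (ms) for one virtual disk
samples = [4.2, 6.8, 5.1, 41.0, 7.3]
print(round(mean(samples), 2), within_latency_guideline(samples))
```

Because this is an average, the single 41 ms sample does not push the disk over the limit.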

The following graph compares latency (lower is better) for each protocol relative to 4Gb FC (Fibre Channel):

Image Source: NetApp TR-3916


Observed average latencies were within 4% of those observed with 4Gb FC, so the latency variance between the protocols is very tight.

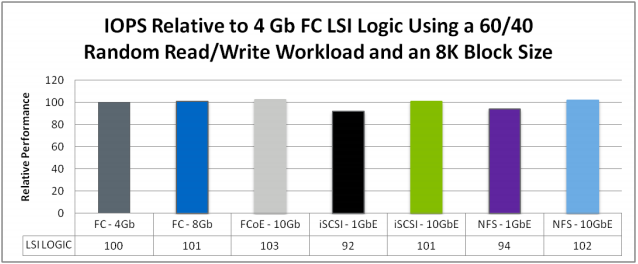

Throughput (IOPS):

The term IOPS refers to the number of input/output operations per second that can be achieved with a given device, network or system.

For shared storage arrays this can be a crucial performance characteristic, primarily because the array is a shared resource with potentially thousands of concurrent connections, each of which could be performing any number of operations per second.
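Little's Law helps explain why concurrency matters for IOPS. It relates the average number of outstanding I/Os, the average latency and the achievable I/O rate. Below is a minimal sketch; the queue depths and latencies are hypothetical.

```python
def iops_from_littles_law(outstanding_ios: float, latency_ms: float) -> float:
    """Little's Law: throughput = concurrency / latency.

    outstanding_ios: average number of I/Os in flight (e.g. aggregate queue depth)
    latency_ms: average time each I/O spends in the system, in milliseconds
    """
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return outstanding_ios / (latency_ms / 1000.0)

# Hypothetical: 32 outstanding I/Os at 2 ms average latency
print(iops_from_littles_law(32, 2.0))   # roughly 16,000 IOPS
# Same latency, many more concurrent VMs queueing I/O against the array
print(iops_from_littles_law(512, 2.0))  # roughly 256,000 IOPS, if the array can hold 2 ms
```

Read the other way around: if the array's latency rises as concurrent connections pile up, the IOPS each host can achieve at a fixed queue depth falls.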

This graph compares IOPS (higher is better) for each protocol relative to 4Gb FC (Fibre Channel):

Image Source: NetApp TR-3916


Results show that all protocols running over links faster than 1GbE performed within 3% of 4Gb FC, and that protocols running over 1GbE links performed within 6–8% of 4Gb FC. Very tight variances here as well.

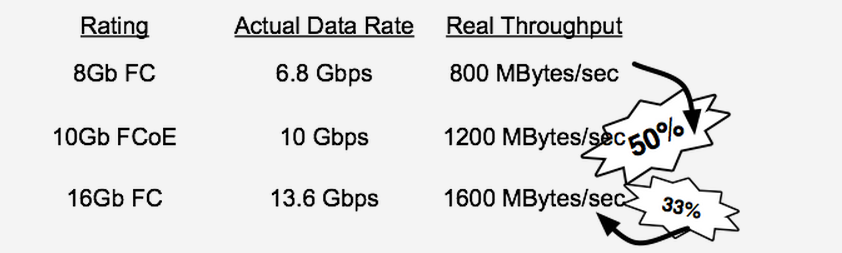

Throughput (MB/s):

Often referred to as bandwidth, this is a measure of how many bytes of data you can transmit per second. However, there is always some overhead that prevents you from achieving the full line rate (e.g., a full 4 Gbps over 4Gb FC).
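IOPS and MB/s are two views of the same traffic: throughput in MB/s is roughly IOPS multiplied by the average I/O size. Below is a minimal sketch using decimal megabytes (10^6 bytes); the workload figures are hypothetical.

```python
def throughput_mb_s(iops: float, io_size_kib: float) -> float:
    """Approximate throughput in MB/s (10**6 bytes/s) from IOPS and I/O size in KiB."""
    return iops * io_size_kib * 1024 / 1_000_000

# Hypothetical workloads
oltp = throughput_mb_s(16_000, 8)     # small random I/O: many IOPS, modest MB/s
backup = throughput_mb_s(1_600, 256)  # large sequential I/O: few IOPS, high MB/s
print(f"OLTP-like:   {oltp:.1f} MB/s")
print(f"Backup-like: {backup:.1f} MB/s")
```

The second workload reaches roughly what a single 4Gb FC link can carry (about 400 MB/s) at a modest IOPS count. This is why IOPS and bandwidth are worth tracking separately.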

If you’re choosing between implementing a Fibre Channel network and a 10Gb Ethernet network, consider this rough chart, which factors in some transport-layer overhead:

Image Source: Cisco blogger J Metz


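The main reason Ethernet carries much less overhead than FC here is line encoding. 4Gb and 8Gb FC use 8b/10b encoding, which spends 2 of every 10 bits on the wire on encoding (20% overhead). 10Gb Ethernet uses 64b/66b encoding, which spends only 2 of every 66 bits (about 3%). The TCP/IP headers on top of that add relatively little bandwidth overhead, especially if Jumbo Frames (9000-byte MTU) are in use.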
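The encoding arithmetic can be sketched as follows. The line rates (4.25 and 8.5 Gbaud for 4Gb and 8Gb FC, 10.3125 Gbaud for 10GbE) are the nominal signaling rates for those standards. The Ethernet framing figures assume a 1500-byte or 9000-byte MTU carrying 20-byte IPv4 and 20-byte TCP headers with no options. The sketch ignores FC frame headers and iSCSI/NFS protocol headers entirely, so treat the results as rough upper bounds rather than measured throughput.

```python
def data_rate_gbps(line_rate_gbaud: float, encoding_efficiency: float) -> float:
    """Usable bit rate after line encoding (8b/10b -> 0.8, 64b/66b -> 64/66)."""
    return line_rate_gbaud * encoding_efficiency

def tcp_payload_efficiency(mtu: int) -> float:
    """Fraction of Ethernet wire bytes that carry TCP payload.

    Assumes IPv4 + TCP headers with no options (40 bytes) inside the MTU, plus
    38 bytes of per-frame wire overhead: preamble/SFD (8), MAC header (14),
    FCS (4) and inter-frame gap (12).
    """
    return (mtu - 40) / (mtu + 38)

links = {
    "4Gb FC (8b/10b)": data_rate_gbps(4.25, 8 / 10),
    "8Gb FC (8b/10b)": data_rate_gbps(8.5, 8 / 10),
    "10GbE (64b/66b)": data_rate_gbps(10.3125, 64 / 66),
}
for name, gbps in links.items():
    print(f"{name}: {gbps:.2f} Gbps = {gbps * 1000 / 8:.0f} MB/s after encoding")

# Apply Ethernet framing overhead on top of 10GbE's post-encoding rate
for mtu in (1500, 9000):
    mb_s = links["10GbE (64b/66b)"] * 1000 / 8 * tcp_payload_efficiency(mtu)
    print(f"10GbE, MTU {mtu}: ~{mb_s:.0f} MB/s of TCP payload")
```

On these assumptions, 8Gb FC tops out around 850 MB/s before FC framing. 10GbE leaves roughly 1,190 MB/s of TCP payload at a 1500-byte MTU and about 1,240 MB/s with jumbo frames.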

Ethernet-based protocols have an advantage in this category, but bandwidth may not be the bottleneck in your VMware environment if you’re using 10GbE or 8Gb FC (hint: it usually isn’t). At the time of writing, most storage devices don’t yet support 16Gb FC.

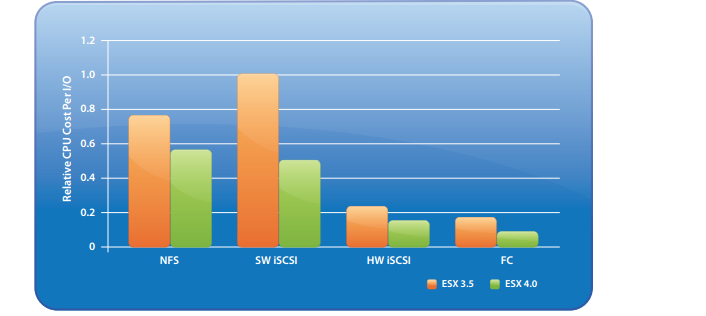

CPU Overhead:

The CPU cost of a storage protocol is a measure of the amount of CPU resources used by the hypervisor (ESX) to perform a given amount of I/O.
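One rough, hypothetical way to put a number on this is to express CPU cost as host CPU cycles consumed per I/O: total cycles used per second divided by IOPS. The utilization, core count and clock speed below are illustrative assumptions, not figures from the white paper.

```python
def cpu_cycles_per_io(utilization: float, cores: int, clock_ghz: float, iops: float) -> float:
    """Average host CPU cycles spent per I/O.

    utilization: fraction of total host CPU consumed by the I/O workload (0.0-1.0)
    """
    if iops <= 0:
        raise ValueError("iops must be positive")
    cycles_per_second = utilization * cores * clock_ghz * 1e9
    return cycles_per_second / iops

# Hypothetical host: 8 cores at 2.0 GHz driving the same 50,000 IOPS two ways
sw_initiator = cpu_cycles_per_io(0.20, 8, 2.0, 50_000)  # protocol processed on host CPU
hw_offload = cpu_cycles_per_io(0.05, 8, 2.0, 50_000)    # protocol processed on an HBA
print(f"software: {sw_initiator:,.0f} cycles/IO, offload: {hw_offload:,.0f} cycles/IO")
print(f"relative cost: {sw_initiator / hw_offload:.1f}x")
```

A large relative gap between two such figures can still be a small share of a host that has CPU to spare.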

There are I/O cards specifically designed to offload the computational burden of some of these protocols from the server’s CPU to an ASIC engineered for that particular protocol. One example is an FC HBA (host bus adapter). Another is an iSCSI HBA, an Ethernet NIC specifically engineered for the iSCSI protocol.

Note that this CPU overhead graph (lower is better) includes both hardware (HW) and software (SW) iSCSI:

Image Source: Comparison of Storage Protocol Performance in VMware vSphere™ 4 White Paper


FCoE is not included in the graph, but it would be expected to perform similarly to HW iSCSI.

Protocols that support CPU-offloading I/O cards (FC, FCoE and HW iSCSI) have a clear advantage in this category. Although the relative difference here is dramatic, the overall effect of the overhead could be negligible. If your VMware workload is extremely CPU-intensive, however, it could become decisive.

RESILIENCY:

With MPIO (multipath I/O) for the block protocols, or LACP (Link Aggregation Control Protocol) for NFS, all of these storage protocols can be implemented with a high degree of resiliency.
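Below is a minimal, hypothetical sketch of how multipathing adds resiliency: a round-robin path selector with failover. It rotates I/O across paths after a configurable number of I/Os and skips any path marked as failed. It is a simplified model for illustration only, not VMware's Native Multipathing Plugin. The class, the path names and the `io_limit` parameter are invented for this example.

```python
from dataclasses import dataclass

@dataclass
class Path:
    name: str
    healthy: bool = True

class RoundRobinSelector:
    """Hypothetical round-robin multipath selector with simple failover."""

    def __init__(self, paths: list[Path], io_limit: int = 1000):
        if not paths:
            raise ValueError("need at least one path")
        self.paths = paths
        self.io_limit = io_limit  # I/Os to issue on one path before rotating
        self._index = 0
        self._ios_on_current = 0

    def _advance(self) -> None:
        self._index = (self._index + 1) % len(self.paths)
        self._ios_on_current = 0

    def select(self) -> Path:
        """Return the path to use for the next I/O."""
        if self._ios_on_current >= self.io_limit:
            self._advance()  # spread load across paths
        for _ in range(len(self.paths)):
            path = self.paths[self._index]
            if path.healthy:
                self._ios_on_current += 1
                return path
            self._advance()  # fail over past a dead path
        raise RuntimeError("all paths are down")

a, b = Path("path-A"), Path("path-B")
selector = RoundRobinSelector([a, b], io_limit=2)
print([selector.select().name for _ in range(4)])  # ['path-A', 'path-A', 'path-B', 'path-B']
a.healthy = False                                  # simulate a failed link or switch
print([selector.select().name for _ in range(3)])  # ['path-B', 'path-B', 'path-B']
```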

FEATURES:

VAAI (vStorage APIs for Array Integration):

VAAI is a feature introduced in ESX(i) 4.1 which works in conjunction with a storage array to provide hardware acceleration.

Primarily this means CPU offloading from the ESXi host to the storage array:

Image Source: TechRepublic


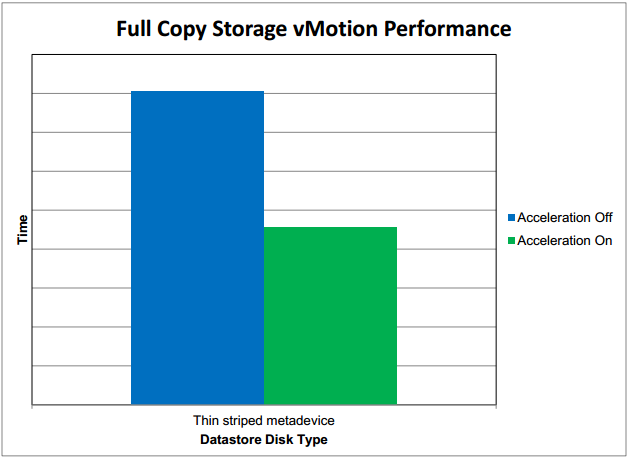

And because the data no longer has to travel up to the ESX host and back down to the array before the second copy is written out to disk, certain storage-related vSphere operations (Storage vMotion, for example) can be performed much more quickly and efficiently:
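The saving can be sketched with simple arithmetic. In a host-based copy, every byte of a virtual disk is read across the storage network into the host and then written back out. With VAAI's full copy offload, the host only issues copy commands and the array moves the data internally. The 4 MiB per-command extent below is an assumption for illustration, and the sketch ignores the size of command and metadata traffic.

```python
import math

GIB = 1024 ** 3
MIB = 1024 ** 2

def host_copy_fabric_bytes(disk_bytes: int) -> int:
    """Host-based copy: data is read from the array and written back (2x)."""
    return 2 * disk_bytes

def offload_copy_commands(disk_bytes: int, extent_bytes: int = 4 * MIB) -> int:
    """Offloaded copy: one copy command per extent (assumed size); data stays on the array."""
    return math.ceil(disk_bytes / extent_bytes)

vmdk = 100 * GIB  # hypothetical 100 GiB virtual disk being moved
print(f"host-based copy: {host_copy_fabric_bytes(vmdk) / GIB:.0f} GiB of data over the storage network")
print(f"offloaded copy:  {offload_copy_commands(vmdk):,} copy commands, no bulk data through the host")
```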

Image Source: Using VMware vSphere Storage APIs for Array Integration with EMC Symmetrix White Paper

All four protocols discussed support VAAI, and that support varies more between storage platforms than between the network storage protocols themselves.

CONCLUSION:

These are all robust, high-performing protocols that can be implemented resiliently and have ample support from VMware. Choosing one will depend more on existing infrastructure, in-house expertise, workload characteristics and, perhaps most importantly, the array vendor’s implementation than on any inherent merits of the protocols themselves.

When selecting a storage array, VAAI support can be paramount to achieving efficiencies in both hypervisor CPU and the storage network. And in terms of performance, implementing flash or improving network latency and throughput as part of a storage solution will far outweigh any gains made through the choice of protocol.

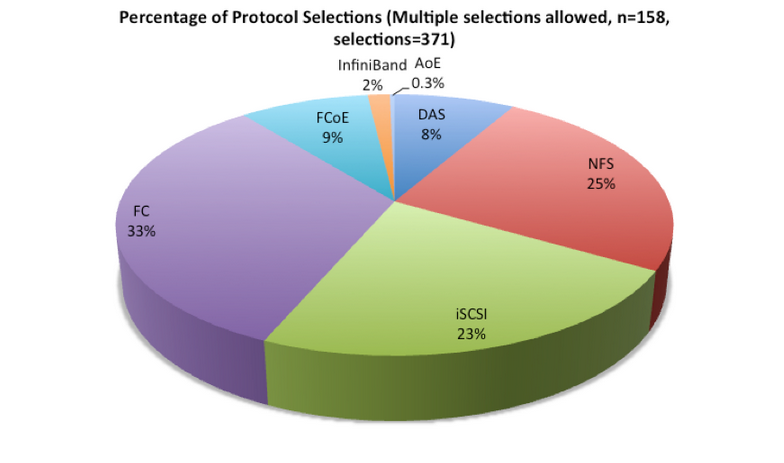

As further evidence of their mutual viability, here are protocol selection survey results from a subset of the VMware vSphere install base:

Image Source: Wikibon 2012, from Survey July 2012, n=158

Finally, it’s important to note that the data shown here is highly environment-specific and can vary greatly depending on the storage, systems, network, I/O devices (e.g., HBA or NIC), applications and their configuration.

REFERENCES:

VMware:

VMware Storage Protocol Comparison White Paper

Comparison of Storage Protocol Performance in VMware vSphere™ 4 White Paper

What’s New in Performance in VMware vSphere™ 5.0 White Paper

VMware vSphere Storage APIs – Array Integration White Paper

VMware FAQ for vStorage APIs for Array Integration

NetApp:

VMware vSphere 4.1 Storage Performance: Measuring FCoE, FC, iSCSI and NFS Protocols White Paper

EMC:

Using VMware vSphere Storage APIs for Array Integration with EMC Symmetrix White Paper

Cisco:

Cisco Blog > DataCenter and Cloud

TechRepublic:

VAAI should be a requirement in your next storage array

Wikibon:

VMware vSphere 5 Users Move Beyond the Storage Protocol Debate