By: Bill Jones, Sr. Solution Architect, Dasher Technologies

In today’s blog post, I will be covering Intel Hyper-Threading Technology, how it can benefit system performance, and when it might not. I will also provide a simple method for creating a rough comparison of system performance between hyper-threaded CPUs and non-hyper-threaded CPUs.

SYNOPSIS:

Intel Hyper-Threading Technology can boost processing performance of a system by up to 30%. Hyper-threading creates two logical processors from one physical processor core. It does so by providing two sets of registers (called architectural states) on each core. When hyper-threading is enabled on an Intel socket, the second architectural state on each core can accept threads from the operating system (or hypervisor). These two threads will still share internal microarchitecture components called execution units. This can result in up to 30% more processing performance in a single socket system. In dual socket systems, hyper-threading can provide up to a 15% improvement. If performance tuning is critical in your environment, testing performance both with and without hyper-threading could be important.

TECHNICAL DETAILS

Below is the how and why of hyper-threading…

What is Hyper-threading?

Hyper-threading is an Intel technology that provides a second set of registers (i.e., a second architectural state) on a single physical processor core. This allows a computer’s operating system or hypervisor to access two logical processors for each physical core on the system.

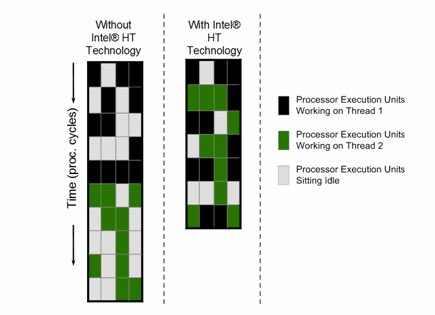

Image source: Intel

As threads are processed, some of the internal components of the core (called execution units) are often idle in any given clock cycle. By enabling hyper-threading, the execution units can process instructions from two threads simultaneously, which means fewer execution units will be idle during each clock cycle. As a result, enabling hyper-threading may significantly boost system performance.

How Much will Hyper-Threading Boost Processing Performance?

Based on guidelines from multiple server manufacturers, I use the following rule of thumb. For a single socket system, hyper-threading can boost system performance by up to 30%. For dual socket systems, hyper-threading can boost performance by up to 15%. For quad-socket (or higher) systems, performance testing with and without hyper-threading enabled is recommended.

In the diagram below, we see an example of how processing performance can be improved with Intel HT Technology. Each 64-bit Intel Xeon processor includes four execution units per core. With Intel HT Technology disabled, the core’s execution units can only work on instructions from Thread 1 or from Thread 2 at any one time. As expected, during many of the clock cycles, some execution units are idle. With Hyper-Threading enabled, the execution units can process instructions from both Thread 1 and Thread 2 simultaneously. In this example, hyper-threading reduces the required number of clock cycles from 10 to 7.

Image source: Intel
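To make the idea concrete, here is a minimal toy model in Python. It is only a sketch under simplifying assumptions: each thread is a list of steps, each step needs some number of the core's four execution units, and with hyper-threading a second thread may issue in the same cycle if enough units are still free. The workloads are made up (chosen so the totals land on the same 10 and 7 cycles as the example above); they are not taken from Intel's diagram, and real cores schedule instructions very differently.

```python
UNITS_PER_CORE = 4  # the post's figure of four execution units per core


def cycles_without_ht(thread_a, thread_b):
    """Only one thread issues per cycle, so the step counts simply add up."""
    return len(thread_a) + len(thread_b)


def cycles_with_ht(thread_a, thread_b, units=UNITS_PER_CORE):
    """Both threads may issue in the same cycle if enough units are free."""
    queues = [list(thread_a), list(thread_b)]
    for demand in queues[0] + queues[1]:
        if not 1 <= demand <= units:
            raise ValueError("each step must need between 1 and `units` units")
    cycles = 0
    while queues[0] or queues[1]:
        free = units
        # Alternate which thread gets first pick so neither one starves.
        order = (0, 1) if cycles % 2 == 0 else (1, 0)
        for i in order:
            if queues[i] and queues[i][0] <= free:
                free -= queues[i].pop(0)
        cycles += 1
    return cycles


# Made-up workloads: execution units needed by each thread at each step.
thread_1 = [2, 3, 1, 4, 2]
thread_2 = [3, 1, 2, 2, 3]

print(cycles_without_ht(thread_1, thread_2))  # 10
print(cycles_with_ht(thread_1, thread_2))     # 7
```

Notice that the gain depends entirely on how often the two threads' needs fit side by side. If every step needed all four units, the hyper-threaded count would equal the non-hyper-threaded one.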

Fortunately, modern operating systems and hypervisors are hyper-threading aware and will load active threads evenly across physical cores. As a result, the early problems with performance tuning multi-core systems with hyper-threading enabled have been mostly eliminated. However, if CPU affinity is required in your environment, please consult the relevant documentation for your operating system or hypervisor. For example, the VMware Performance Best Practices for VMware vSphere 5.5 states:

“Be careful when using CPU affinity on systems with hyper-threading. Because the two logical processors share most of the processor resources, pinning vCPUs, whether from different virtual machines or from a single SMP virtual machine, to both logical processors on one core (CPUs 0 and 1, for example) could cause poor performance.” via VMware
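One way to picture this guidance is to choose at most one logical processor per physical core before pinning anything. The Python sketch below assumes a hypothetical topology in which logical CPUs 2n and 2n+1 share core n (matching the "CPUs 0 and 1" example in the quote). Real numbering varies by platform, so the mapping should come from your operating system or hypervisor; the function name is also just illustrative.

```python
def one_logical_cpu_per_core(cpu_to_core):
    """Return the lowest-numbered logical CPU on each physical core."""
    chosen = {}
    for cpu in sorted(cpu_to_core):
        core = cpu_to_core[cpu]
        # Keep only the first logical CPU seen for each core.
        chosen.setdefault(core, cpu)
    return sorted(chosen.values())


# Hypothetical 4-core host with hyper-threading enabled:
# logical CPUs 0 and 1 share core 0, CPUs 2 and 3 share core 1, and so on.
example_topology = {cpu: cpu // 2 for cpu in range(8)}

pin_candidates = one_logical_cpu_per_core(example_topology)
print(pin_candidates)  # [0, 2, 4, 6]
```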

PERFORMANCE

One of the largest challenges with understanding the performance improvements from hyper-threading is how processor performance is reported by monitoring tools. Let’s take a quick look at how Microsoft Windows and VMware vSphere report CPU usage. A detailed look at performance monitoring could easily fill several blog posts, so we’ll keep this part of the discussion simplified for now.

Understanding Windows Performance Reporting with Hyper-Threading

Microsoft Windows reports “% Processor Time” by calculating the percentage of time that logical processors executed idle threads (during the reporting interval) and subtracting that amount from 100%. Since Microsoft Windows is hyper-threading aware, the operating system will only use the second architectural state on a physical processor core when there are more active threads than there are physical cores in the system. However, Windows performance monitoring tools will still report both architectural states as logical processors within the operating system.

Now, let’s combine these two facts and see how performance reporting changes when hyper-threading is enabled. If the Windows server has two 8-core Intel Xeon processors and hyper-threading is disabled, then Performance Monitor (PERFMON.EXE) will report 16 logical processors. If the % Processor Time for the “_Total” instance is consistently between 40% and 50%, then roughly 6 to 8 threads (40% to 50% of 16 logical processors) are executing at any given moment, on average.

If we enable hyper-threading on this server, Windows will now report 32 logical processors (two logical processors per physical core). Since Windows is hyper-threading aware, those 6 to 8 active threads will still be distributed (on average) to one active thread per physical core, and the second architectural state on each core will go largely unused. In this example, enabling hyper-threading has neither improved nor impaired system performance. However, Performance Monitor now averages those 6 to 8 active threads across 32 logical processors. As a result, the % Processor Time value is cut in half. So, it looks like enabling hyper-threading has doubled system performance! Unfortunately, as discussed earlier, the second architectural state on each core has to share microarchitecture components with the first architectural state, and the system’s performance capacity has not actually been doubled.

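So, with the Windows Performance Monitor tool, it is important to remember that at 0%-50% CPU load, hyper-threading is not regularly leveraged by the system. Also, the journey from 50% to 100% is a lot shorter than the journey from 0% to 50%: above 50%, additional threads land on logical processors whose physical cores are already busy, so each added percentage point represents far less real capacity.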
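The reporting effect can be sketched with a few lines of arithmetic. This is a deliberately simplified model, not how Performance Monitor is implemented: it assumes every active thread keeps one logical processor fully busy, and the function names are made up for illustration.

```python
def reported_percent(active_threads, physical_cores, hyper_threading):
    """Approximate '% Processor Time' for _Total under the simplified model."""
    logical = physical_cores * (2 if hyper_threading else 1)
    return 100.0 * min(active_threads, logical) / logical


def busy_core_percent(active_threads, physical_cores):
    """Share of physical cores running at least one thread."""
    return 100.0 * min(active_threads, physical_cores) / physical_cores


cores = 16    # two 8-core processors, as in the example above
threads = 8   # average number of active threads

print(reported_percent(threads, cores, hyper_threading=False))  # 50.0
print(reported_percent(threads, cores, hyper_threading=True))   # 25.0
print(busy_core_percent(threads, cores))                        # 50.0
```

The reported value halves when hyper-threading is switched on, while the share of busy physical cores does not move.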

Understanding VMware vSphere Performance Reporting with Hyper-Threading

Modern hypervisors (like VMware ESXi 5.x) are hyper-threading aware. VMware states, “ESXi hosts manage processor time intelligently to guarantee that load is spread smoothly across processor cores in the system… Virtual machines are preferentially scheduled on two different cores rather than on two logical processors on the same core.” (source: “vSphere Resource Management, ESXi 5.5, vCenter Server 5.5,” via VMware)

As a best practice, VMware recommends that hyper-threading be enabled. In its performance best practices whitepapers for both ESXi 4.x and 5.x, VMware states, “…hyper-threading can provide anywhere from a slight to a significant increase in system performance by keeping the processor pipeline busier.” (sources: http://bit.ly/1muhaOA, http://bit.ly/1dVMe99)

Within VMware, CPU resources are usually reported in megahertz (MHz). Three of the key metrics for CPU utilization are “usagemhz,” “totalmhz,” and “usage.” The specific definitions of the metrics change depending on whether the context is a VMware vSphere datacenter, a cluster, a resource pool, a host, or a virtual machine. Here is a general description of the three metrics.

usagemhz: measures (in MHz) compute resources consumed

totalmhz: measures (in MHz) compute resources available

usage: reports (as a percentage) usagemhz divided by totalmhz

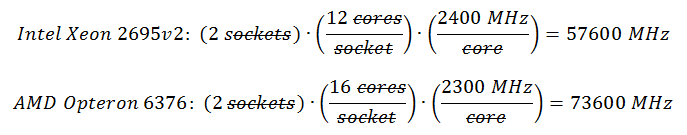

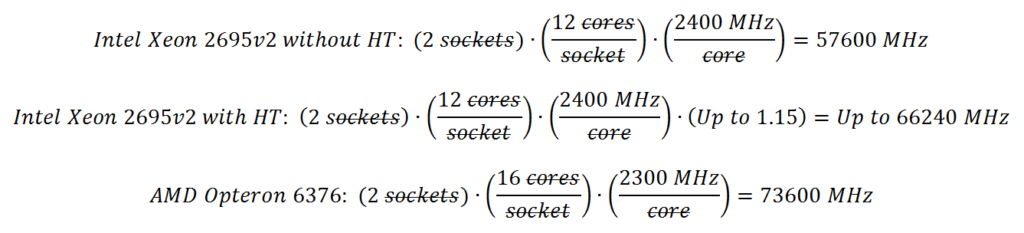

Totalmhz for an ESXi host is calculated by multiplying the number of physical cores in the host by the clock speed of those cores. Below is a demonstration of the calculations of two dual-socket servers – one with the Intel Xeon 2695v2 processors and one with AMD Opteron 6376 processors.

The VMware capacity calculation does not take into account the potential benefits of hyper-threading, so it could underestimate the CPU performance capacity of a host.

Usagemhz represents the active CPU usage within the context. Usage is reported as a percentage and is calculated by dividing usagemhz by totalmhz.
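As a rough sketch, the host-level arithmetic looks like this in Python. The core counts and clock speeds are assumptions taken from public spec sheets (12 cores at 2.4 GHz for the Xeon, 16 cores at 2.3 GHz for the Opteron); the Xeon result matches the 57,600MHz figure used later in this post. The function names and the 14,400MHz sample load are made up for illustration.

```python
def total_mhz(sockets, cores_per_socket, clock_mhz):
    """Host capacity: number of physical cores times clock speed."""
    return sockets * cores_per_socket * clock_mhz


def usage_percent(usage_mhz, capacity_mhz):
    """'usage' is usagemhz expressed as a percentage of totalmhz."""
    return 100.0 * usage_mhz / capacity_mhz


# Assumed specs for two dual-socket servers.
xeon_total = total_mhz(sockets=2, cores_per_socket=12, clock_mhz=2400)
opteron_total = total_mhz(sockets=2, cores_per_socket=16, clock_mhz=2300)

print(xeon_total)                         # 57600
print(opteron_total)                      # 73600
print(usage_percent(14400, xeon_total))   # 25.0
```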

When is Hyper-Threading Not a Good Idea?

Sometimes, hyper-threading is not beneficial to system performance. In extreme cases, enabling hyper-threading could reduce system performance. Below is a brief list of environments where hyper-threading may return little or no improvement. If your environment includes systems that meet these criteria, testing system performance with and without hyper-threading enabled is recommended.

Hyper-threading should be tested if:

- the server has more than two sockets,

- the server has a large number of physical cores,

- the operating system is not hyper-threading aware (example: Windows Server 2003),

- the application is single-threaded or does not handle multiple threads efficiently,

- the application is already designed to maximize the use of the execution units in each core, or

- the application has a very high rate of memory I/O.

SIMPLE AND ROUGH COMPARISON

As promised, here is a simplified and very rough method for comparing CPU performance with and without hyper-threading. This model does not take into account many other significant factors that can improve system performance – factors like processor caches, processor generation, hardware virtualization assistance, memory speed, etc. So, if you’re going to use this model, please remember that it provides a back-of-the-napkin, very-very-simplified, very-rough comparison of CPUs.

Leveraging the VMware model for calculating CPU capacity in MHz, we will add a “hyper-threading factor.” The hyper-threading factors I use are “up to 1.3” for single socket systems and “up to 1.15” for dual socket systems. With that in mind, let’s recalculate the processor performance capabilities of the Intel Xeon 2695v2 and the AMD Opteron 6376 processors. For this exercise, the processors will be installed in dual-socket servers.

As we can see, the Intel Xeon 2695v2 with hyper-threading can boost system performance from 57,600MHz to “up to” 66,240MHz. Depending on the environment, this 15% potential improvement could be significant.
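The same back-of-the-napkin calculation can be written as a short Python sketch. The factors are the "up to" rule-of-thumb values from this post, so the result is a ceiling rather than a prediction. The function name is made up, and the Opteron figure of 73,600MHz assumes 16 cores at 2.3 GHz per socket; since hyper-threading is an Intel technology, no factor is applied to it.

```python
# "Up to" hyper-threading factors from the rule of thumb, keyed by socket count.
HT_FACTORS = {1: 1.30, 2: 1.15}


def ht_adjusted_mhz(base_mhz, sockets, hyper_threading=True):
    """Upper-bound capacity in MHz after applying the hyper-threading factor."""
    if not hyper_threading:
        return base_mhz
    factor = HT_FACTORS.get(sockets)
    if factor is None:
        # The rule of thumb gives no factor beyond two sockets: test instead.
        raise ValueError("no rule-of-thumb factor for this socket count")
    # round() avoids floating-point noise such as 66239.99999999999.
    return round(base_mhz * factor)


print(ht_adjusted_mhz(57600, sockets=2))                         # 66240
print(ht_adjusted_mhz(73600, sockets=2, hyper_threading=False))  # 73600
```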

CONCLUSION

Generally speaking, hyper-threading is a good thing. Many manufacturers recommend enabling hyper-threading as part of their best practice guidelines. In fact, many administrators have used hyper-threading for many years without incident. However, as we’ve seen, hyper-threading is a “your mileage may vary” technology.

Hyper-threading has come a long way since it was first released in 2002. Much of that improvement is due to improved hyper-threading support in operating systems and hypervisors. If you’re among those who were burned by hyper-threading in its early days, I invite you to try it again.

Intel Hyper-Threading Technology offers a potential improvement to system performance, and it may be available in the hardware that you already have.

(For this blog post, we greatly simplified the descriptions of how hyper-threading aware operating systems and hypervisors balance load across sockets, cores, and logical processors. For a very detailed description of VMware CPU scheduling, please see “The CPU Scheduler in VMware vSphere 5.1.”)