Foreword by Chris Saso, CTO; content and blog by Isaack Karanja, Senior Solution Architect

Good things never really die, they just come back again decades later…

When I went to college way back in the 1980s to study Computer Science, you’ll never guess what was all the rage: Artificial Intelligence (AI) and Neural Networks. Today my son is a sophomore CS student and, guess what, there are classes available in AI and Neural Networks. Dasher is working with many of our clients on building HPC clusters with GPU technology to train AI environments and learning systems. This is such a great time to be in technology!

When I joined Dasher in 2003, Dasher was selling HP-UX systems. HP-UX had a couple of physical and virtual partitioning features called nPars and vPars. nPars allowed a user to carve physical servers out of one larger server, which is what the new HPE Synergy solution is doing today. vPars were essentially equivalent to what VMware ESX has done for x86 servers: virtual partitioning of physical hardware into logical OS machines. Add to the mix the great solution from Citrix for virtualizing applications, and it is not a stretch to see that containers are the next logical progression in application workload virtualization. What I think you will realize is that containers are an evolution of a number of technology areas, and when they are described in the context of where technology has been, it is easy to see that they are a logical progression of how applications should be managed.

I will let Isaack take it from here as he introduces the concept of containers and some of the major technologies in this space.

Container Platforms

“Containers” is a word that is thrown around loosely these days, a lot like “DevOps”. Let us start by briefly looking at the benefits we get from using containers.

Benefits of Containers

- Small Image – you don’t need to package the OS binaries with the application

- Fast Startup – apps start quickly because there is no OS to load first

- Efficient – you can run more containerized apps than VMs on the same CPU/memory since each container does not carry its own OS image

- Portable – containerized apps can be easily migrated between physical servers, private cloud and public cloud

- Scalable – because of Efficiency and Portability scaling up/down is faster and simpler

- Consistency – Containers provide consistency across development, testing and production environments

- Loose Coupling – Containers provide loose coupling between the application and operating systems

- Timing – Containers came at a time when it was considered very important to support agile development and operations (DevOps)

- Security – Containers are more secure since the OS is the major attack surface, and since you manage fewer OSes you reduce the number of places where patches are required

Before we go into exactly what containers are, let’s briefly examine container history. The first thing you’ll notice is that containers have been with us for a long time.

- 1979 UNIX chroot (added to BSD in 1982)

- 2000 FreeBSD Jails (added Filesystems, users, networks)

- 2005 OpenVZ (Filesystems, users/groups, process tree, networks, devices, IPC)

- 2006 Process containers (Linux Kernel 2.6.24 – limit CPU, memory, disk, network IO)

- 2008 Control Groups (Cgroups merged into the Linux Kernel)

- 2008 LXC (Linux Containers, CLI, language bindings for 6 languages)

- 2011 Warden, CloudFoundry

- 2013 LMCTFY (Let me contain that for you) Google Talks

But containers really came of age and went mainstream because of events at a startup company called DotCloud (Docker).

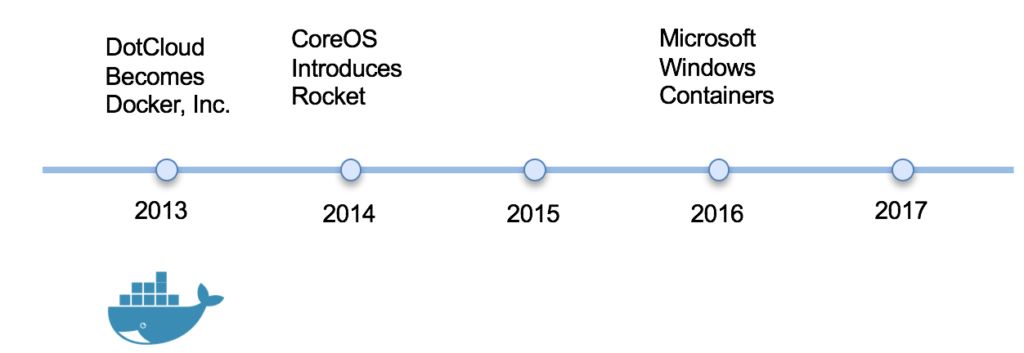

Docker Timeline

In 2013, DotCloud was a platform-as-a-service (PaaS) company whose product allowed developers to build and run applications without worrying about the underlying infrastructure. DotCloud’s PaaS product was not popular and never took off. DotCloud’s customers, on the other hand, were very interested in the underlying technology, called Docker, which DotCloud later open-sourced. As the open-source Docker technology took off, DotCloud pivoted to focus on Docker and changed its name to Docker Inc.

So what did Docker do differently that allowed containers to become so popular, so much so that containers are synonymous with Docker? To understand that, you need to know what all container systems have in common and what they are made of.

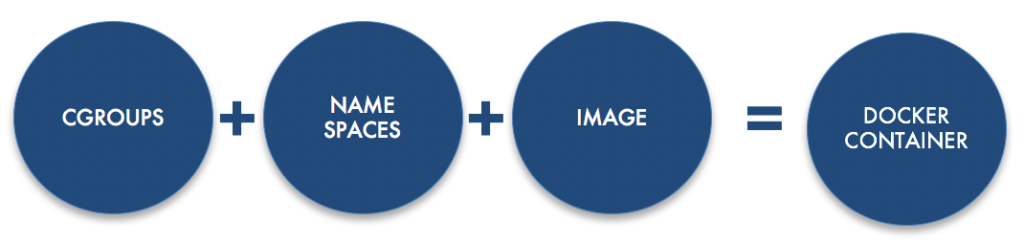

Containers are made of two specific technologies:

Linux Cgroups

Linux Control Groups (also known as Cgroups) are a Linux kernel feature that limits, accounts for, and isolates the resource usage of a collection of processes.

Some key things to note:

- Cgroups are implemented as a kernel feature

- Cgroups are used to group processes

- Control groups provide limits for resource allocation: CPU, memory, disk and network I/O

- Cgroups can be nested (i.e. you can have one cgroup underneath another cgroup)
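To make the grouping and nesting points concrete, here is a minimal Python sketch that reads the cgroup membership the kernel reports for a process. It assumes the `hierarchy-ID:controller-list:path` line format of `/proc/<pid>/cgroup`; the helper names `parse_cgroup_lines` and `nesting_depth` are made up for this illustration.

```python
from pathlib import Path


def parse_cgroup_lines(text):
    """Parse lines in the /proc/<pid>/cgroup format: 'hierarchy-ID:controllers:path'."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split on the first two colons only, in case the cgroup path contains colons.
        hierarchy_id, controllers, path = line.split(":", 2)
        entries.append({
            "hierarchy": int(hierarchy_id),
            # cgroup v2 leaves the controller field empty ("0::/some/path").
            "controllers": controllers.split(",") if controllers else [],
            "path": path,
        })
    return entries


def nesting_depth(path):
    """Count how many cgroups deep a path such as '/a/b' is ('/' is depth 0)."""
    return len([part for part in path.split("/") if part])


if __name__ == "__main__":
    proc_file = Path("/proc/self/cgroup")
    if proc_file.exists():  # this file is only present on Linux
        for entry in parse_cgroup_lines(proc_file.read_text()):
            print(entry, "depth:", nesting_depth(entry["path"]))
```

Run inside a container, the reported paths are typically nested under a parent cgroup created by the container engine, which is the nesting described in the last bullet.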

Linux Kernel namespaces

Namespaces are a feature of the Linux kernel that isolates the system resources of a collection of processes.

Some key things to note:

- Linux namespaces are also a kernel feature

- They provide a restricted view of the kernel (operating system): network, filesystem, PIDs, mounts, users (including root), UTS (hostname), etc.

- Linux kernel namespaces can also be nested (i.e. you can have one namespace underneath another namespace)
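As a rough illustration of that restricted view, the Python sketch below lists the namespaces a process belongs to. It assumes the Linux convention that each entry in `/proc/<pid>/ns` is a symlink whose target looks like `pid:[4026531836]`; two processes share a namespace when the number matches. The function names are hypothetical.

```python
import os
import re

# Symlink targets in /proc/<pid>/ns look like "net:[4026531992]".
NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def read_namespaces(ns_dir="/proc/self/ns"):
    """Map each entry name in ns_dir to the namespace inode number it points at."""
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        path = os.path.join(ns_dir, name)
        if not os.path.islink(path):
            continue
        match = NS_LINK.match(os.readlink(path))
        if match:
            namespaces[name] = int(match.group("inode"))
    return namespaces


def shared_namespaces(a, b):
    """Return the namespace entries for which two processes report the same inode."""
    return sorted(kind for kind in a if kind in b and a[kind] == b[kind])


if __name__ == "__main__":
    if os.path.isdir("/proc/self/ns"):  # only present on Linux
        for kind, inode in read_namespaces().items():
            print(kind, inode)
```

Comparing the output for a process on the host with one inside a container should show different numbers for the namespaces the container engine has isolated.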

This is all there is to Linux containers. Let us quickly contrast the container building blocks vs Virtual Machines.

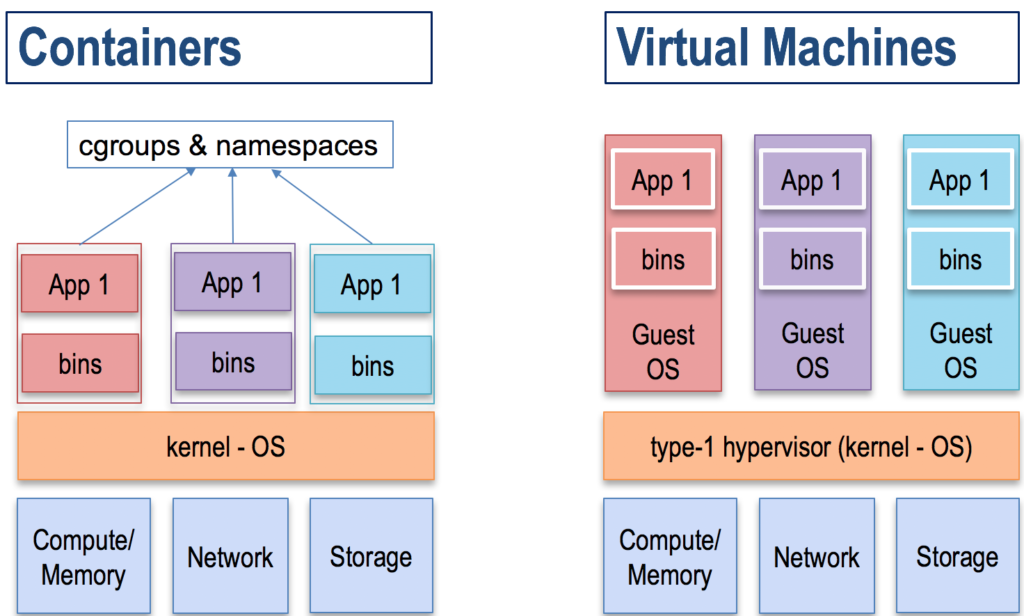

Containers vs Virtual Machines

The diagram above shows containers running on bare metal vs virtual machines running on bare metal. In both of these deployments, there is isolation between the red, purple and green applications, but the isolation is enforced differently. For the containerized applications, isolation is enforced through the use of Cgroups and namespaces. For the virtual machines, isolation is established by giving each application its own copy of a guest operating system.

By giving each process its own namespace and isolating it using Cgroups, the containerized applications are faster, more efficient and more secure. Some key things to note:

- In a type-1 hypervisor (where the hypervisor is part of the kernel), the hypervisor sits on the execution path of the instructions from the application. The hypervisor is responsible for providing virtualized hardware to the guest OS and translates all the instructions it receives from the guest OS to machine code. This introduces overhead, and the overhead is even worse when using nested virtualization or type-2 hypervisors.

- In a containerized application, Cgroups and namespaces only control the process startup and the process teardown. During application execution runtime, containerized applications interact directly with the kernel since Cgroups and namespaces are not in the execution path. This results in the containerized applications having:

- Shorter startup time (startup is measured in seconds or minutes for virtual machines)

- Faster execution, since the containerized application’s instructions are not sent through a hypervisor

- Since containerized applications are more efficient, you can run more of them on the same CPU/memory/networking resources.

- There is no performance penalty when you nest containers, again because Cgroups and namespaces only control startup and teardown information for the containerized application process.

- It is possible to provide the virtualized guest operating system with the same application-to-hardware efficiency. This is accomplished by bypassing the hypervisor and presenting the hardware to the guest OS kernel using PCI passthrough. Unfortunately, this negates the benefits of virtualization, and one loses key virtualization features such as vMotion.

- In VM environments, each VM has its own copy of the guest operating system. This means that much of the data coupled to the application is the operating system itself. Since containers all share the same kernel, all you have to move is the application’s specific information, which is generally just a few MB in size. This makes containers highly portable. In order to run a containerized application, all you need is a Linux kernel that supports Cgroups and namespaces. As of Docker 1.3 (Sep 2017), Docker’s minimum supported Linux kernel version is 3.10.
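The kernel requirement in the last point can be checked with a few lines of Python. This is only a sketch: it takes the 3.10 minimum quoted above at face value, and `parse_kernel_release` and `kernel_at_least` are names invented for the example.

```python
import platform
import re

MIN_KERNEL = (3, 10)  # minimum kernel version quoted in the text above


def parse_kernel_release(release):
    """Extract (major, minor) from a release string such as '3.10.0-1160.el7.x86_64'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_at_least(release, minimum=MIN_KERNEL):
    """Return True if the kernel release is at or above the minimum (major, minor)."""
    return parse_kernel_release(release) >= minimum


if __name__ == "__main__":
    if platform.system() == "Linux":
        release = platform.release()
        print(release, "meets minimum:", kernel_at_least(release))
```

Comparing `(major, minor)` tuples rather than strings avoids the classic mistake of treating "3.9" as newer than "3.10".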

What makes Docker so special?

You might have noticed that Cgroups and namespaces were there long before Docker came along, so what makes Docker so special, to the point where the name Docker became synonymous with containers?

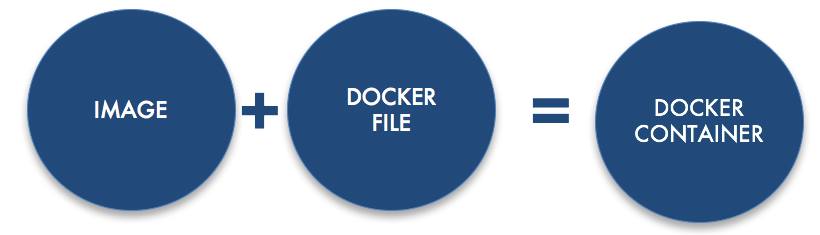

Along with Docker container specs, Docker also introduced a way to package and easily share containers. Docker introduced the Docker container image.

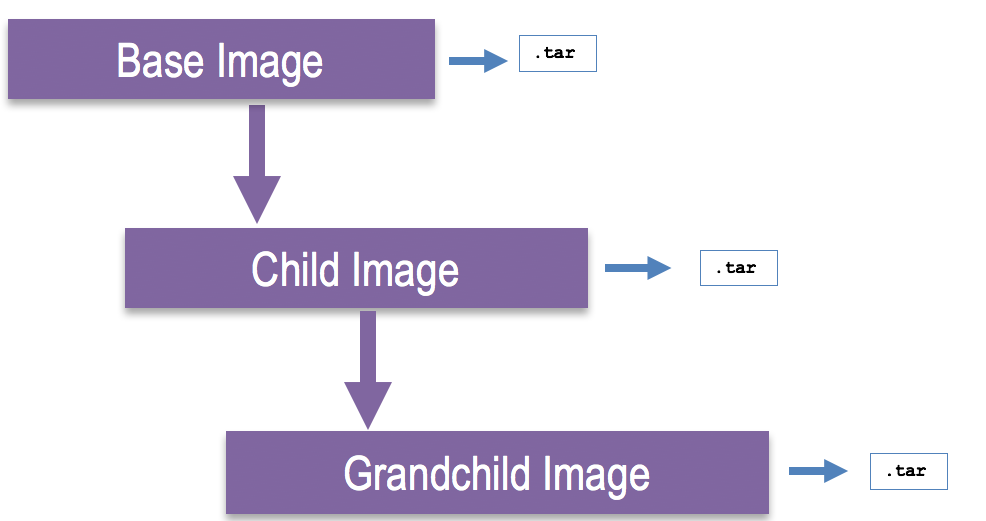

A Docker container image is a tar file that is built based on a nesting relationship of containers. An image is essentially a snapshot of a running container rolled into a tar file.

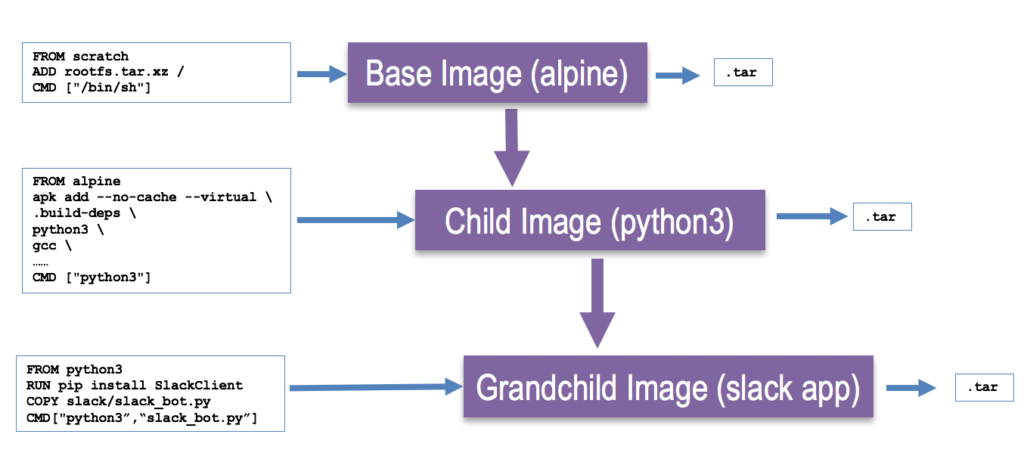

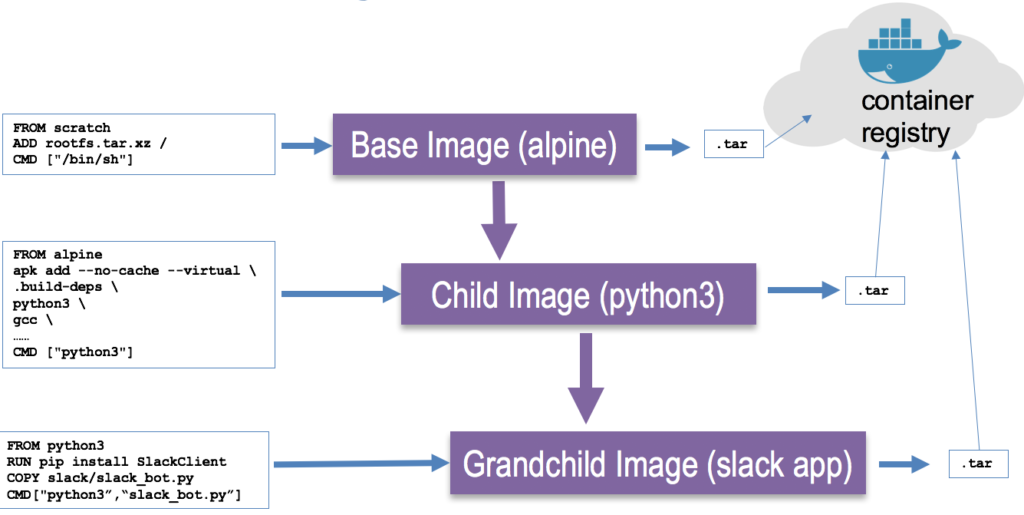

A container image is built from a Dockerfile. A Dockerfile contains all the instructions for building a single image and allows you to extend an existing Docker image. A Dockerfile starts with a FROM statement, which references the parent Docker image. Building a Dockerfile results in an image tar file.
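To show how the FROM statement ties an image to its parent, here is a small Python sketch that extracts the parent image from the text of a Dockerfile. The sample Dockerfile and the `parent_image` helper are illustrative assumptions, not part of Docker itself, and the parsing is deliberately simplified.

```python
# A hypothetical Dockerfile for a small application image.
SAMPLE_DOCKERFILE = """\
# Build on top of an existing parent image
FROM python:3.11-slim
COPY app.py /app/app.py
CMD ["python", "/app/app.py"]
"""


def parent_image(dockerfile_text):
    """Return the image named by the first FROM instruction, or None if there is none."""
    for raw_line in dockerfile_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        tokens = line.split()
        if tokens[0].upper() != "FROM":  # instruction names are not case-sensitive
            continue
        # Skip flags such as --platform=linux/amd64 that may precede the image name.
        args = [tok for tok in tokens[1:] if not tok.startswith("--")]
        return args[0] if args else None
    return None


if __name__ == "__main__":
    print(parent_image(SAMPLE_DOCKERFILE))
```

Applying the same function to the parent's own Dockerfile, and then to its parent, walks the chain of images back toward the base.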

If you examine the Dockerfile of an initial base image, you will find that it references the “scratch” image, an empty image that just points to the Linux kernel.

In addition to the container image, Docker also introduced the concept of a container registry. A container registry is a place where you store all your tar files (container images). The initial registry was Docker Hub.

Once containers had images and a container registry, they now had full lifecycle management. This is the container lifecycle:

- User codes their application and puts it in a Dockerfile (for example, on their local workstation with Docker installed). This would become a grandchild application image.

- User builds the Dockerfile to create a tar file (Docker image) that would be pushed to a Docker registry.

- User moves the image to another machine (for example a production server) with a Linux kernel and Docker installed.

- User runs the Docker container from the grandchild application image on the new machine. As long as Docker has a connection to the container registry, Docker can pull all the layers necessary to build the full running application.
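The last step, pulling every layer needed for the grandchild image, can be pictured with a toy Python model. This is a sketch of the nesting idea only: the in-memory `REGISTRY`, its image names and the `layers_to_pull` function are all hypothetical, and a real registry works through its own protocol and image manifests rather than a dictionary lookup.

```python
# Toy in-memory "registry": image name -> (parent image, layer description).
# All image names and layers here are hypothetical.
REGISTRY = {
    "base-os": ("scratch", "base filesystem"),
    "runtime": ("base-os", "language runtime"),
    "my-app": ("runtime", "application code"),
}


def layers_to_pull(image, registry):
    """Walk the parent chain from image up to 'scratch'; return layers base-first."""
    chain = []
    seen = set()
    current = image
    while current != "scratch":
        if current in seen:
            raise ValueError(f"cycle in parent chain at {current!r}")
        if current not in registry:
            raise KeyError(f"image {current!r} not found in registry")
        seen.add(current)
        parent, layer = registry[current]
        chain.append((current, layer))
        current = parent
    chain.reverse()  # the base layer is applied first, the application layer last
    return chain


if __name__ == "__main__":
    for name, layer in layers_to_pull("my-app", REGISTRY):
        print(f"pull {name}: {layer}")
```

Here `my-app` plays the role of the grandchild application image: it needs its own layer plus everything inherited from `runtime` and `base-os`.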

While Docker has emerged as the de facto container engine and containers have almost become synonymous with Docker, there are other container platforms.

- rkt, supported by a company called CoreOS (biggest competitor to Docker)

- LXC/LXD from the Linux Community

- LXC/LXD is designed to run full system containers (run full operating systems)

- OpenVZ from the Linux Community, supported by a company called Virtuozzo

- Designed to run full system containers

- runC, containerd, systemd-nspawn

That’s a lot of information to start your journey to containers, so I am going to break this topic up into a couple of blog posts. In the next post, I will delve into more topics such as orchestration engines, scheduling, service discovery, load balancing and Kubernetes.

Further reading: Containers 102: Continuing the Journey from OS Virtualization to Workload Virtualization